Dirty Physics, Distilled Empiricism

Michael (M.F.) Ashby and the Empirical-Analytical Continuum

“Over the past 100 years, the understanding of materials at the atomistic and electronic level has increased immensely. Diffraction of radiation and of particles has elucidated structure. Quantum mechanics has explained the nature of bonding and predicted the magnitude of properties such as Young's modulus. Statistical mechanics has provided the fundamental basis for reaction-rate theory, and allowed the modelling of structural change (the heat-treatment of steels, for example). Defect theory - particularly the theory of point defects, of dislocations and of crystal boundaries - now gives a detailed picture of the atomistic aspects of deformation, of creep and of fracture. However, all this has had remarkably little direct influence on design in mechanical engineering.”

Michael Ashby, on Predictive Materials Design in 1987

On April 30, 1993, four years after publishing a proposal for “an idea of linked information systems,” computer scientist Tim Berners-Lee (a fellow at CERN, Switzerland) released the source code for the world’s first web browser and editor. The system was originally called Mesh; the browser, which he dubbed WorldWideWeb, became the first royalty-free, easy-to-use means of browsing the emerging information network that developed into the web as we know it today.

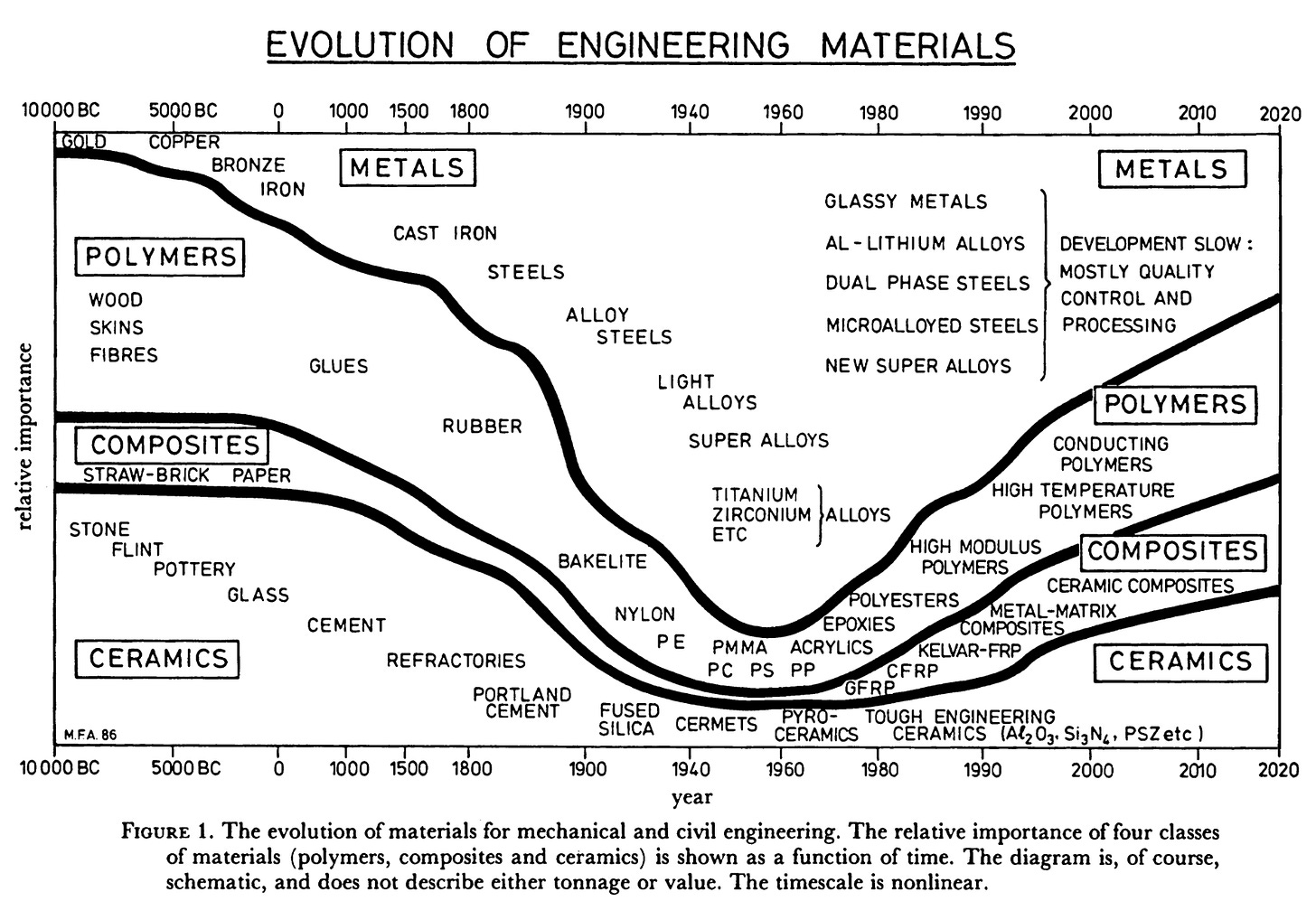

Seven years prior, at Cambridge University on Trumpington Street, a group of eight, all members of the Royal Society in London, held a symposium on the theme: Technology of the 1990s. These men were Michael Farries Ashby, Stephen F. Bush, Norman Swindells, Ronald Bullough, Graham Ellison, Yngve Lindblom, Robert Wolfgang Cahn and John F. Barnes. The steel industry was declining in favor of other classes of materials, like high-strength polymers, ceramics, and structural composites, and they were concerned that another material revolution was approaching, with discontinuous, rapid changes leading to disruption and resultant social and economic problems. The octet wanted more practical engineering solutions to keep up with the oncoming era of advanced materials.

During that same year, across the Atlantic in Warrendale, Pennsylvania, the inchoate idea of intelligent processing of materials took shape in the minds of Haydn Wadley, Eugene Eckhart, Jr., B.G. Kushner, P.A. Parrish, B.B. Rath, and S.M. Wolf. Let us skip the history of their ideas and focus on the history of design.

Early mechanical design was empirical: you tried something and, if it worked, you used that method again; if it did not, you moved on to the next thing. The result is the vast store of empirical knowledge we have today, and some may say this is one of the foundations of current practice. Evolving paradigms of mechanical design, however, comprise new sets of tools to account for the increasing reliability that complex assets demand. One of these tools can be classed under mathematics and continuum modelling. Since materials obey certain experimentally observed rules (thermodynamics, rate theory, and so on), continuum theories of heat flow, diffusion, and reaction rates, for instance, can be derived by applying mathematical methods to the simplified results of vast amounts of experimentation.

Although powerful, continuum design is limited in that it only describes material response to certain stimuli. Prediction, especially for new forms of stimuli, is a whole different beast. These abilities have nonetheless been extended by computer power, the second leg of the tripod, through optimization: minimizing cost, maximizing performance, and ensuring safety.

The third foundation of current practice can be described as atomistic modelling: diffraction to elucidate structure, quantum mechanics to explain the nature of bonding, statistical mechanics as the basis of reaction-rate theory and structural change, and defect theory to explain deviations from theoretical ideals. All of this, up until recently, had remarkably little direct influence on design in mechanical engineering.

It makes sense why this is the case. The microscopic parameters used in atomistic modelling can in principle be measured, but they are not practically useful in the routine high-throughput manufacture of widgets. Moreover, they are simply not known with useful precision in any real engineering material. Imagine needing molecular vibration frequencies, mobile dislocation densities, interatomic potentials, and impurity concentrations: parameters that vary widely across engineering materials.

Continuum theory is useful because it reduces the number of independent variables via grouping, constraining the design and vastly reducing the number of experiments necessary, thereby guiding you more efficiently to the optimum design. Empiricism then plays its role in fine-tuning. The “computational complexity” increases as the conditions under which the material is used become more severe, since these require a higher level of optimization, and difficulties multiply as more properties are involved. For instance, a spring required to operate at high temperatures may suffer from creep, or from fatigue because of cyclic stresses. When failure modes interact, their effects must be superposed, and a non-linear relationship between parameters causes these constitutive equations to break down.

Atomistic modelling, though, is not the panacea it is purported to be, because it depends on precise knowledge of certain microscopic variables that cannot easily be measured (like grain boundary diffusion rate or jump frequency). But these models have something else that is useful: broad features that point to rules which constitutive equations must obey. Properly interpreted, these metarules help us group variables in new ways, reducing the number of independent variables. For instance, since creep and oxidation are thermally activated, we can infer from statistical mechanics how their rates depend on temperature. The underlying idea is that although atomistic models do not lead directly to precise constitutive laws, they suggest the form these laws should take and place close limits on the values of the physical constants that enter them.
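As a worked illustration of how thermal activation constrains the form of a constitutive law, consider the widely used power-law description of steady-state creep with an Arrhenius temperature term. This is a sketch under stated assumptions, not a form taken from the text above: the symbols (strain rate $\dot{\varepsilon}$, stress $\sigma$, pre-factor $A$, stress exponent $n$, activation energy $Q$, gas constant $R$, absolute temperature $T$) are introduced here purely for illustration.

$$
\dot{\varepsilon} = A\,\sigma^{n}\exp\!\left(-\frac{Q}{RT}\right)
\quad\Longrightarrow\quad
\ln\dot{\varepsilon} = \ln A + n\ln\sigma - \frac{Q}{R}\cdot\frac{1}{T}
$$

Taking logarithms shows the grouping at work: three measured quantities collapse onto a relation that is linear in $\ln\sigma$ and $1/T$, so the atomistic picture fixes the shape of the law while experiment only has to supply $A$, $n$, and $Q$.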

The happy medium is one that draws on all three foundations: model-informed empiricism. The models suggest forms for the constitutive equations, and for the significant groupings of the variables that enter them; empirical methods can then be used to establish the precise functional relations between these groups. The result is a constitutive equation that contains the predictive power of atomistic modelling with the precision of ordinary curve-fitting. In parallel, the broad rules governing material properties can be exploited to create and check the database of material properties that enter the equations.
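A minimal sketch of model-informed empiricism, assuming a thermally activated rate of the Arrhenius form `rate = A * exp(-Q / (R * T))`: the model supplies the functional form, and ordinary least squares supplies the constants. The function names, the constants `TRUE_A` and `TRUE_Q`, and the "measurements" are all hypothetical; the data are synthetic values generated from the assumed law, not real test results.

```python
import math

R = 8.314  # universal gas constant, J/(mol*K)


def predict_rate(a, q, temp_k):
    """Arrhenius-form rate law: the model-suggested functional form."""
    return a * math.exp(-q / (R * temp_k))


def fit_arrhenius(temps_k, rates):
    """Least-squares fit of ln(rate) = ln(A) - (Q/R) * (1/T).

    Returns the fitted (A, Q). The grouping 1/T comes from the model;
    the constants come from the data.
    """
    xs = [1.0 / t for t in temps_k]
    ys = [math.log(r) for r in rates]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return math.exp(intercept), -slope * R


# Synthetic "measurements" from assumed constants (illustrative only).
TRUE_A, TRUE_Q = 1.0e8, 250e3  # pre-factor (1/s) and activation energy (J/mol)
temps = [800.0, 850.0, 900.0, 950.0, 1000.0]  # kelvin
rates = [predict_rate(TRUE_A, TRUE_Q, t) for t in temps]

A_FIT, Q_FIT = fit_arrhenius(temps, rates)

# Interpolate to a temperature that was never "tested".
RATE_AT_925K = predict_rate(A_FIT, Q_FIT, 925.0)
```

Because the form is fixed in advance, five data points are enough to pin down two constants and then interpolate between tested conditions; a purely empirical curve fit would need far more data to earn the same trust.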

“You might think all this so obvious that it hardly needs saying, but its potential contribution to mechanical engineering is only just being recognized and dignified by the title of intelligent design and processing”

From these axioms, one can envision the realization of the ideal engineering workstation, which J.H. Westbrook and H. Kröckel describe as computerized materials information systems. It is a response to the highly entropic field of advanced materials, where the properties and combinations of properties required for the next generation of products (shall we call them assets) are often new and are impractical to standardize on a global scale rather than case by case. These new materials (and material systems) are often not homogeneous, monolithic, and off-the-shelf, but rather ad hoc tailored compositions and micro- and macro-scale composite structures with strongly process-related properties; and designers increasingly insist on property values of designated reliability.

The challenge is then to accumulate data - numeric, tabular and graphic - from diverse sources, convert it to machine-readable form with a harmonized array of metadata descriptors and present the resulting factual database(s) to the user in a convenient and cost-effective manner.

Among the categories of applied research information that have a pronounced sensitivity to socio-economic factors are the properties of technically relevant materials. Factual data on materials and their properties are the most condensed form of materials information needed in engineering technology, and it is not surprising that they are considered as vital resources for the competitiveness and innovational capacity of industry. This critical potential of materials information has actually been widely perceived and has given rise to worldwide efforts driving the enhancement of generation, evaluation and dissemination of materials data and knowledge.

Science and technology may ultimately benefit from the development of a new category of information source, the knowledge graph, which uses identical hardware but incorporates special algorithms from the domain of artificial intelligence. Such knowledge-based systems open the possibility of computer-assisted intelligent evaluation, advice, reasoning and decision-making, and this is just the tip of the iceberg. Because the user interfaces of factual databases will also require such capabilities, and because knowledge bases in turn need data, the future is likely to bring us various combinations of these concepts.

Depending on the degree of sophistication one wishes to use in the analysis, a single property of an engineering material determined by a typical material test can be shown to be influenced by several dozen parameters that have their origin in the characteristics of the material and its production processes, the test method and conditions, the testing controls and environmental parameters, the specimen characteristics, and in various technical, commercial and standard conditions. Collectively, these descriptors of the numeric property data themselves are known as metadata, data about data. Beyond the sheer number of these physical and technical parameters, the ways in which they influence each other and the property under consideration are complex and sometimes poorly known.
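To make the idea of metadata concrete, here is a minimal sketch of a property record that carries its descriptors with it and can report which groups are still missing. The class name, field names, group names, and every value in the example are hypothetical placeholders chosen for illustration; they do not follow any real materials data standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Metadata groups loosely following the origins listed above (assumed names).
METADATA_GROUPS = (
    "material",
    "process",
    "test_method",
    "test_conditions",
    "specimen",
)


@dataclass
class PropertyRecord:
    """One numeric property value plus the metadata that qualifies it."""

    property_name: str
    value: float
    unit: str
    metadata: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def missing_groups(self) -> List[str]:
        """Return the metadata groups that are absent or empty."""
        return [g for g in METADATA_GROUPS if not self.metadata.get(g)]


# Placeholder record: the numbers and labels are made up for the example.
record = PropertyRecord(
    property_name="yield_strength",
    value=250.0,
    unit="MPa",
    metadata={
        "material": {"designation": "example-steel"},
        "test_method": {"standard": "hypothetical-tensile-test"},
        "test_conditions": {"temperature": "293 K"},
    },
)

MISSING = record.missing_groups()  # groups still needed before the value is trusted
```

A check like `missing_groups` is one simple way a database could flag a value whose provenance is incomplete before it reaches a designer.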

Unlike the fundamental scientist who tends to simplify and isolate effects to study causalities, the engineer lives with a pragmatically optimized multiparameter system. His materials are not only subject to physically accessible factors, but also to those relating to production technology, trade, legislation, safety and standardization. The way in which these complexities affect the flows of data and knowledge to the ultimate engineering user is shown schematically below, which also differentiates between raw, validated, catalogue, standard and evaluated data.

An adequate formal description of a computerized materials data system, or of a relevant, self-consistent subsystem satisfying the information requirements of a limited technical application, therefore requires much analytical effort. The resulting complexity has usually made the computer implementation of engineering material data systems a very demanding, and ultimately expensive, enterprise. The possibilities of data retrieval depend on the quality of the structural design, the structured coding system and the database management system. The level of sophistication of these components, however, also affects the cost of data banks, a factor that must ultimately determine their creation or continued existence. High costs arise not only in their construction but also in their operation, in particular in the input and output interface processes. The problems and costs of formatting and entering data often undermine the commercial feasibility of a computerized database. Equally, there are many unsolved problems at the data output end of the system, where communication with materials specialists or engineering users takes place. The development of intelligent access procedures and query languages, which would relieve untrained users of the need to acquire detailed system knowledge, is far from satisfactory. The resulting poor usage, combined with the high cost of data input, makes many systems prohibitively cost-ineffective.

Certain user needs must be satisfied to make this feasible for both ends of the pipeline:

- Comprehensiveness
- Structured metadata
- Data reliability
- Benchmarking
- Ease of system use
- Versatile, integrated capabilities to streamline input and output workflows

The most severe handicap for the development of factual materials data systems and information networks with an international scope of operation is the inadequate level of agreed, harmonized standards for materials, tests and properties: benchmarks, if you will. Because a computerized database is an intermediary between an environment that generates data and an environment that uses them, unambiguous terms and descriptors are necessary. Materials data banks are therefore calling more strongly than ever for a worldwide multilingual standard terminology for the materials field, including definitions, symbols and abbreviations, as well as for clear standards in materials designations, numbering systems, and the test methods that define the properties to be processed.

From materials information systems, the logical next step is the implementation of materials information networks, with the goal of making them extremely useful and accessible to the practicing engineer. As many engineering systems impose an extreme level of reliability on the materials used, constitutive rules and metarules must ensure conformance through modelling that:

- effects considerable compression of data from diverse combinations of independent test variables to a simple, concise representation of the behavior of a given material;
- provides an analytical formulation of the material behavior for input to stress analysis, for example, by finite element methods;
- enables life prediction of components or systems;
- helps verify theories of the behavior of matter;
- permits interpolation, and sometimes extrapolation, from the experimental data to include conditions not physically tested;
- helps identify regularities and patterns in data leading to new models and new theories;
- helps guide experimental research in fruitful directions, often reducing the number of test measurements required;
- facilitates the simplification of an experimental program, and hence effects economies in development, by making possible computer simulation of materials behavior across length, time, and other parameter scales.

Of course, the logical leap from materials information networks (predicated on the aforementioned foundations) is the proliferation of dynamic (quantum mechanically accurate) data banks, which self-correct based on experimental validation. Further evolution of this system into integrated materials design will culminate in knowledge-based systems, which emulate human reasoning to solve complex technical problems. This is my idea of material intelligence: massively parallel, multiplayer, multiparameter materials design systems which serve as the ideal self-consistent engineering workstation.

The way cloud interacts with land, i.e. the way the digital world interacts with the physical world, can be classed into three paradigms as they pertain to materials:

- input (digitalization of the physical world, parameterization)
- experimentation (generating reliable materials data, simulating behavior)
- output (instantiating a digital asset in physical space)

Subsequent publications will be necessary to delineate the ideal technology stack, from rendering systems to control systems, all the way to the matter compiler. Only then will it become clearer what material intelligence actually means.

From the idea of speeding up the three foundations and three paradigms arises the concept of autonomous materials discovery via materials acceleration platforms. Good work is being done by the Materials Genome Initiative in the US and the Acceleration Consortium in Canada.

This is truly the stuff of stars. What a time to be alive!
